botlab

Mapping the Way Out


The Botlab project integrates SLAM, path planning, and motion control to enable a wheeled robot to autonomously explore and escape structured environments with real-time visual feedback.

Co-Developer

OCT 2024 - DEC 2024

Justin Lu

Changhe Chen

This project develops a mobile robot capable of autonomous exploration, mapping, and navigation in structured environments such as mazes. Using LiDAR sensing, SLAM for real-time mapping and localization, and A* path planning, the system builds maps, estimates its pose, and computes efficient routes. A closed-loop control framework integrates odometry and sensor feedback to keep motion precise and adaptable, and the robot demonstrated robust performance in navigating and escaping complex environments.
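As a rough illustration of the A* planning step, the Python sketch below searches an 8-connected occupancy grid. The grid encoding (0 = free, 1 = occupied), the `astar` function name, and the octile-distance heuristic are assumptions for illustration rather than the project's actual code; a real planner would typically also inflate obstacles by the robot's radius before searching.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* on an 8-connected grid (0 = free, 1 = occupied); returns a cell path or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(c: Cell) -> float:
        # Octile distance: admissible and consistent for step costs 1 and sqrt(2)
        dr, dc = abs(c[0] - goal[0]), abs(c[1] - goal[1])
        return (dr + dc) + (math.sqrt(2) - 2) * min(dr, dc)

    open_heap = [(heuristic(start), 0.0, start)]  # entries are (f = g + h, g, cell)
    g_cost: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    closed = set()

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale entry left behind by a later, cheaper push
        if cell == goal:
            path = []
            node: Optional[Cell] = cell
            while node is not None:  # walk parent links back to the start
                path.append(node)
                node = parent[node]
            return path[::-1]
        closed.add(cell)
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr == 0 and dc == 0:
                    continue
                nr, nc = cell[0] + dr, cell[1] + dc
                if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                    continue
                # Forbid diagonal moves that would clip the corner of an obstacle
                if dr and dc and (grid[cell[0]][nc] or grid[nr][cell[1]]):
                    continue
                new_g = g + (math.sqrt(2) if dr and dc else 1.0)
                nxt = (nr, nc)
                if new_g < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = new_g
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None  # goal unreachable
```

For example, on a small grid where the only gap in a wall is on the right, the planner routes through that gap:

```python
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
```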

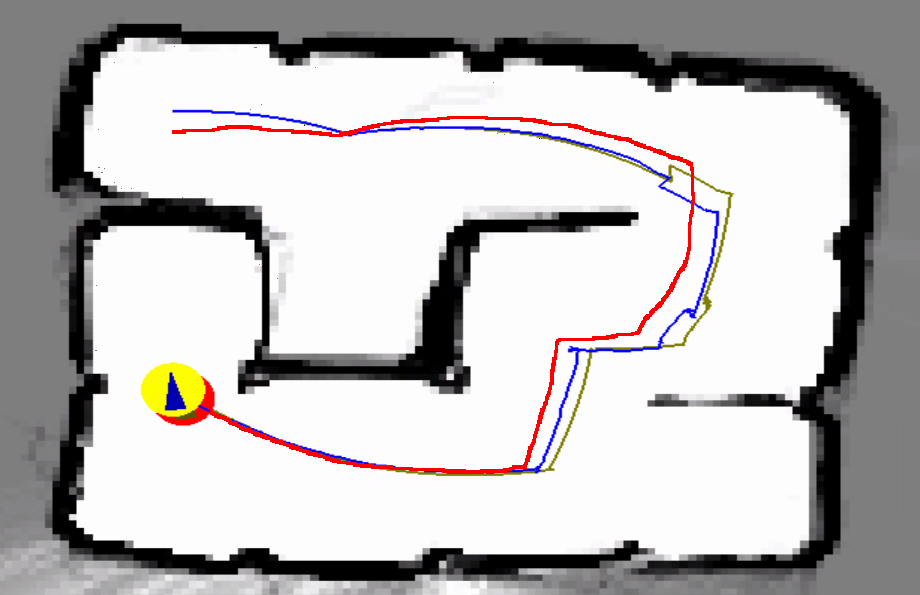

A particle-filter SLAM framework fuses LiDAR data with odometry to build occupancy grid maps in real time. Gaussian noise in the odometry-based motion model captures uncertainty, while the sensor model weights each pose hypothesis by how well its laser scan aligns with the map. This enables accurate pose estimation and robust mapping under real-world conditions.
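The localization half of such a particle filter can be sketched as a predict-weight-resample loop. The sketch below assumes an odometry action model parameterized as (rot1, trans, rot2) with Gaussian noise that scales with each motion component, and low-variance (systematic) resampling; the noise coefficients `k_rot` and `k_trans` and the `likelihood` callback standing in for the LiDAR sensor model are hypothetical, and the mapping step is omitted.

```python
import math
import random
from typing import Callable, List, Tuple

Pose = Tuple[float, float, float]  # (x [m], y [m], theta [rad])


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def sample_motion(p: Pose, odom: Tuple[float, float, float], rng: random.Random,
                  k_rot: float = 0.05, k_trans: float = 0.02) -> Pose:
    """Odometry action model: perturb (rot1, trans, rot2) with zero-mean Gaussian
    noise whose standard deviation grows with the size of each component."""
    rot1, trans, rot2 = odom
    r1 = rot1 + rng.gauss(0.0, k_rot * abs(rot1))
    t = trans + rng.gauss(0.0, k_trans * abs(trans))
    r2 = rot2 + rng.gauss(0.0, k_rot * abs(rot2))
    x, y, th = p
    return (x + t * math.cos(th + r1), y + t * math.sin(th + r1), wrap_angle(th + r1 + r2))


def low_variance_resample(particles: list, weights: List[float], rng: random.Random) -> list:
    """Systematic resampling: one random offset, then n evenly spaced pointers
    into the cumulative weight distribution (weights must sum to 1)."""
    n = len(particles)
    u0 = rng.uniform(0.0, 1.0 / n)
    cumulative, i, out = weights[0], 0, []
    for m in range(n):
        u = u0 + m / n
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        out.append(particles[i])
    return out


def pf_step(particles: List[Pose], odom: Tuple[float, float, float],
            likelihood: Callable[[Pose], float], rng: random.Random) -> Tuple[List[Pose], Pose]:
    """One predict-weight-resample cycle; returns new particles and a weighted-mean pose."""
    moved = [sample_motion(p, odom, rng) for p in particles]
    weights = [likelihood(p) for p in moved]
    total = sum(weights)
    if total <= 0.0:
        weights = [1.0 / len(moved)] * len(moved)  # degenerate case: fall back to uniform
    else:
        weights = [w / total for w in weights]
    # Weighted-mean pose; heading is averaged on the unit circle to handle wrap-around
    x = sum(w * p[0] for w, p in zip(weights, moved))
    y = sum(w * p[1] for w, p in zip(weights, moved))
    th = math.atan2(sum(w * math.sin(p[2]) for w, p in zip(weights, moved)),
                    sum(w * math.cos(p[2]) for w, p in zip(weights, moved)))
    return low_variance_resample(moved, weights, rng), (x, y, th)
```

A toy usage with a made-up likelihood that favors poses near x = 1 m:

```python
rng = random.Random(0)
particles = [(0.0, 0.0, 0.0)] * 200
fake_likelihood = lambda p: math.exp(-((p[0] - 1.0) ** 2) / (2 * 0.05 ** 2))  # hypothetical sensor model
particles, estimate = pf_step(particles, (0.0, 1.0, 0.0), fake_likelihood, rng)
```

Systematic resampling is shown because it draws all n samples from a single random number, which tends to preserve particle diversity better than n independent draws.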

The MBot platform estimates its position and orientation from wheel encoders and an IMU, combining the two through gyrodometry. A tuned PID controller provides accurate velocity tracking and smooth motion, while calibration minimizes hardware asymmetries. At lower speeds, the robot follows paths precisely, demonstrating reliable waypoint tracking.
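One common formulation of gyrodometry trusts the encoder-derived heading change unless it disagrees with the gyro by more than a threshold (for instance during wheel slip), in which case the gyro's heading change is used instead. The sketch below pairs that rule with differential-drive dead reckoning and a textbook PID velocity loop. The wheel base, threshold, gains, and all function and class names are hypothetical placeholders, not the MBot's calibrated values or firmware.

```python
import math
from dataclasses import dataclass

WHEEL_BASE_M = 0.15         # hypothetical wheel separation
GYRO_THRESHOLD_RAD = 0.01   # hypothetical encoder/gyro disagreement threshold


@dataclass
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0


def gyrodometry_update(pose: Pose2D, d_left: float, d_right: float, d_theta_gyro: float) -> Pose2D:
    """Dead-reckon one step from wheel travel [m]; pick the gyro heading change on disagreement."""
    d_center = 0.5 * (d_left + d_right)
    d_theta_odo = (d_right - d_left) / WHEEL_BASE_M
    # Trust the encoders unless they disagree with the gyro (e.g. wheel slip)
    d_theta = d_theta_gyro if abs(d_theta_gyro - d_theta_odo) > GYRO_THRESHOLD_RAD else d_theta_odo
    mid = pose.theta + 0.5 * d_theta  # integrate along the mid-step heading
    new_theta = math.atan2(math.sin(pose.theta + d_theta), math.cos(pose.theta + d_theta))
    return Pose2D(pose.x + d_center * math.cos(mid), pose.y + d_center * math.sin(mid), new_theta)


class PID:
    """Textbook PID with output clamping and a clamped integral for anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, out_limit: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt
        if self.ki:
            # Keep the integral term alone from exceeding the output limit
            bound = self.out_limit / abs(self.ki)
            self.integral = max(-bound, min(bound, self.integral))
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, out))
```

In this sketch, a step where the right wheel spins 10 cm more than the left while the gyro reports no rotation is treated as slip, so the heading stays unchanged.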

A state-machine-based exploration module identifies frontiers (the boundaries between known and unknown regions) and autonomously drives the robot toward them. Once exploration is complete, the MBot returns to its home position. Continuous status updates and fail-safes keep operation robust in dynamic environments.
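Frontier detection and the surrounding state machine can be sketched as follows. The sketch assumes a tri-state grid (-1 unknown, 0 free, 1 occupied), defines a frontier cell as a free cell with at least one 4-connected unknown neighbor, and groups frontier cells into 8-connected clusters with a breadth-first search. The `State` names, the `min_size` filter, and the transition rules are hypothetical simplifications of the exploration logic described above.

```python
from collections import deque
from enum import Enum, auto
from typing import List, Tuple

UNKNOWN, FREE, OCCUPIED = -1, 0, 1  # assumed cell encoding
Cell = Tuple[int, int]


def find_frontiers(grid: List[List[int]], min_size: int = 1) -> List[List[Cell]]:
    """Return clusters of free cells that border unknown space."""
    rows, cols = len(grid), len(grid[0])

    def is_frontier(r: int, c: int) -> bool:
        if grid[r][c] != FREE:
            return False
        return any(0 <= r + dr < rows and 0 <= c + dc < cols and grid[r + dr][c + dc] == UNKNOWN
                   for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)))

    visited, frontiers = set(), []
    for r in range(rows):
        for c in range(cols):
            if (r, c) in visited or not is_frontier(r, c):
                continue
            # BFS grows one 8-connected cluster of frontier cells
            cluster, queue = [], deque([(r, c)])
            visited.add((r, c))
            while queue:
                cr, cc = queue.popleft()
                cluster.append((cr, cc))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and (nr, nc) not in visited and is_frontier(nr, nc)):
                            visited.add((nr, nc))
                            queue.append((nr, nc))
            if len(cluster) >= min_size:  # drop tiny, noise-induced frontiers
                frontiers.append(cluster)
    return frontiers


class State(Enum):
    EXPLORING = auto()
    RETURNING_HOME = auto()
    COMPLETE = auto()
    FAILED = auto()


def next_state(state: State, frontiers: List[List[Cell]], at_home: bool, path_found: bool) -> State:
    """path_found: whether a path to some frontier (or to home) currently exists."""
    if state is State.EXPLORING:
        if not frontiers:
            return State.RETURNING_HOME  # map fully explored
        return State.EXPLORING if path_found else State.FAILED
    if state is State.RETURNING_HOME:
        if at_home:
            return State.COMPLETE
        return State.RETURNING_HOME if path_found else State.FAILED
    return state  # COMPLETE and FAILED are terminal
```

In a loop built this way, each cycle would update the map, recompute frontiers, plan toward one of them, and call `next_state` to decide whether to keep exploring or head home.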

All ROB-550 project teams consist of 3-4 members who work with an RX-200 robotic manipulator and the MBot over the course of the semester.